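Rant Part

This is a story to make grown men fall silent and women weep.

Thanks to the sports meet, 小young had a whole extra day off. To get a bit further ahead before the training deadline, though, he showed up at the team room early to keep optimizing code.

After a morning spent reading code, 小young understood how the SGEMM tile optimization worked. The plan: first port the code and get it running, then try out a newly discovered profiling tool, and finally optimize further!

Picturing himself breezing through the training problem and being named captain for the upcoming competition, 小young couldn't help giggling to himself. What he didn't know was that the nightmare had only just begun...

When 小young moved the CUDA code to his own Windows machine and compiled it, a wall of errors appeared:

CUDA code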

// -*- coding: utf-8 -*-

#include <stdio.h>

#include <cuda_runtime.h>

#include <cassert>

#include <cstdlib>

#include <ctime>

#define CEIL_DIV(M, N) (((M) + (N)-1) / (N)) // ceiling division: rounds M / N up

typedef unsigned int uint;

void sgemm_naive_cpu(float *A, float *B, float *C, int M, int N, int K)

{

for (int x = 0; x < M; x++)

{

for (int y = 0; y < N; y++)

{

float sum = 0.0f;

for (int i = 0; i < K; i++)

{

sum += A[x * K + i] * B[i * N + y];

}

C[x * N + y] = sum;

}

}

}

// Template parameters:

// BM, BN, BK: dimensions of the block tile

// TM, TN: number of results each thread computes along M and N

template <const int BM, const int BN, const int BK, const int TM, const int TN>

__global__ void __launch_bounds__((BM * BN) / (TM * TN), 1) sgemm_blocktiling_2d_kernel(float *A, float *B, float *C, int M, int N, int K)

{

volatile int dummy_sync = 0;

// Block index

// Relative index of the result block handled by this thread block

const uint c_row = blockIdx.y;

const uint c_col = blockIdx.x;

// Thread index within the block

// Relative position of this thread inside the thread block

// (BN / TN) is the number of threads along the N (width) direction

const uint thread_col = threadIdx.x % (BN / TN);

const uint thread_row = threadIdx.x / (BN / TN);

// Size of the 2D tile (block tile)

// Number of results in the tile

const uint total_results_block_tile = BM * BN;

// Number of threads needed for a block tile

// Number of threads in the tile, i.e. the number of threads in the block

const uint number_threads_block_tile = total_results_block_tile / (TM * TN);

// assert(number_threads_block_tile == blockDim.x);

// Calculate the shared memory index that this thread is responsible for loading

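// Think of the threads as laid out over the BK*BM data block, each thread moving one element

// Below, compute the relative index of the position this thread handles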

const uint inner_row_A = threadIdx.x / BK;

const uint inner_col_A = threadIdx.x % BK;

// Calculate the number of rows each thread block loads at a time

const uint stride_A = number_threads_block_tile / BK;

const uint inner_row_B = threadIdx.x / BN;

const uint inner_col_B = threadIdx.x % BN;

const uint stride_B = number_threads_block_tile / BN;

// Shared memory for matrix A and B

__shared__ float smem_A[BM * BK];

__shared__ float smem_B[BN * BK];

// Initialize thread results and register arrays

float thread_results[TM * TN] = {0.0};

float reg_m[TM] = {0.0};

float reg_n[TN] = {0.0};

// Adjust pointers for A, B, and C

// Move the A, B and C pointers to the start of this block's tile, not to the per-thread position

A += c_row * BM * K;

B += c_col * BN;

C += c_row * BM * N + c_col * BN;

// Outer loop

for (uint bk_idx = 0; bk_idx < K; bk_idx += BK) // runs K/BK iterations

{

// Load matrix A and B into shared memory

for (uint load_offset = 0; load_offset < BM; load_offset += stride_A)

{

smem_A[(inner_row_A + load_offset) * BK + inner_col_A] = A[(inner_row_A + load_offset) * K + inner_col_A];

}

for (uint load_offset = 0; load_offset < BK; load_offset += stride_B)

{

smem_B[(inner_row_B + load_offset) * BN + inner_col_B] = B[(inner_row_B + load_offset) * N + inner_col_B];

}

// Synchronize threads in the block

__syncthreads();

// advance the pointers

// One small tile is done; advance the pointers to the next tile

// Once the outer loop finishes, the full row and column have been processed

A += BK;

B += BK * N;

// Compute dot product

// Compute partial results and accumulate them onto the previous ones

// When the outer bk_idx loop ends, the final BM*BN block result is available

for (uint dot_idx = 0; dot_idx < BK; ++dot_idx)

{

// Load matrix A and B into registers

// Load one column of TM elements into registers

// smem_A is indexed as row * BK + col, so these TM elements are BK apart

for (uint i = 0; i < TM; i++)

{

// (thread_row * TM + i) * BK moves the index to the target row

reg_m[i] = smem_A[(thread_row * TM + i) * BK + dot_idx];

}

// Load one row of TN elements into registers

// smem_B is indexed as row * BN + col, so these TN elements are contiguous

for (uint i = 0; i < TN; i++)

{

reg_n[i] = smem_B[dot_idx * BN + thread_col * TN + i];

}

// Compute multiplication and accumulate results

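// Accumulating the per-thread products yields the final result sub-matrix

// After the dot_idx loop ends, this thread holds a TM*TN result tile

// One pass of the loops below adds one partial contribution to that tile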

for (uint reg_idx_m = 0; reg_idx_m < TM; ++reg_idx_m)

{

for (uint reg_idx_n = 0; reg_idx_n < TN; ++reg_idx_n)

{

thread_results[reg_idx_m * TN + reg_idx_n] +=

reg_m[reg_idx_m] * reg_n[reg_idx_n];

}

}

}

// Synchronize threads in the block

__syncthreads();

}

// Write results back to matrix C

for (uint reg_idx_m = 0; reg_idx_m < TM; ++reg_idx_m)

{

for (uint reg_idx_n = 0; reg_idx_n < TN; ++reg_idx_n)

{

C[(thread_row * TM + reg_idx_m) * N + thread_col * TN + reg_idx_n] =

thread_results[reg_idx_m * TN + reg_idx_n];

}

}

}

void run_sgemm_blocktiling_2d(float *A, float *B, float *C, int m, int n, int k)

{

const uint BK = 8;

const uint TM = 8;

const uint TN = 8;

const uint BM = 64;

const uint BN = 64;

dim3 grid_size(CEIL_DIV(n, BN), CEIL_DIV(m, BM));

dim3 block_size((BM * BN) / (TM * TN));

sgemm_blocktiling_2d_kernel<BM, BN, BK, TM, TN>

<<<grid_size, block_size>>>(A, B, C, m, n, k);

}

void randomize_matrix(float *mat, int N)

{

srand(static_cast<unsigned int>(time(nullptr)));

for (int i = 0; i < N; i++)

{

mat[i] = static_cast<float>(rand() % 100);

}

}

int main(int argc, char *argv[])

{

if (argc != 4) {

printf("Usage: %s <m> <n> <k>\n", argv[0]);

return 1;

}

int m = atoi(argv[1]);

int n = atoi(argv[2]);

int k = atoi(argv[3]);

// Allocate memory for matrices

float *A, *B, *C, *C_ref;

float *d_A, *d_B, *d_C, *d_C_ref;

A = new float[m * k];

B = new float[k * n];

C = new float[m * n];

// save reference result

C_ref = new float[m * n];

// Initialize matrices

randomize_matrix(A, m * k);

randomize_matrix(B, k * n);

// Allocate device memory

cudaMalloc((void **)&d_A, m * k * sizeof(float));

cudaMalloc((void **)&d_B, k * n * sizeof(float));

cudaMalloc((void **)&d_C, m * n * sizeof(float));

cudaMalloc((void **)&d_C_ref, m * n * sizeof(float));

// Copy matrices to device

cudaMemcpy(d_A, A, m * k * sizeof(float), cudaMemcpyHostToDevice);

cudaMemcpy(d_B, B, k * n * sizeof(float), cudaMemcpyHostToDevice);

cudaMemcpy(d_C, C, m * n * sizeof(float), cudaMemcpyHostToDevice);

cudaMemcpy(d_C_ref, C_ref, m * n * sizeof(float), cudaMemcpyHostToDevice);

run_sgemm_blocktiling_2d(d_A, d_B, d_C, m, n, k);

// Copy result to host

cudaMemcpy(C, d_C, m * n * sizeof(float), cudaMemcpyDeviceToHost);

// Run reference sgemm

sgemm_naive_cpu(A, B, C_ref, m, n, k);

// Verify result

for (int i = 0; i < m * n; i++)

{

if (C[i] != C_ref[i])

{

printf("Error: mismatch at index %d, expected %f, got %f\n", i, C_ref[i], C[i]);

return 1;

}

}

printf("Success!\n");

// Calculate performance

cudaEvent_t start, stop;

cudaEventCreate(&start);

cudaEventCreate(&stop);

cudaEventRecord(start);

for (int i = 0; i < 100; i++)

{

run_sgemm_blocktiling_2d(d_A, d_B, d_C, m, n, k);

}

cudaEventRecord(stop);

cudaEventSynchronize(stop);

float elapsed_time = 0;

cudaEventElapsedTime(&elapsed_time, start, stop);

float avg_run_time = elapsed_time * 1000 / 100;

printf("Average run time: %f us\n", avg_run_time);

// Free device memory

cudaFree(d_A);

cudaFree(d_B);

cudaFree(d_C);

cudaFree(d_C_ref);

// Free host memory

delete[] A;

delete[] B;

delete[] C;

delete[] C_ref;

return 0;

}

Terminal error output

PS D:\a_study\code_vs\CUDA-Related-develop\docs\11_gemm_optimize\01_tiled2d> nvcc -o kernel sgemm_tiled2d.cu

nvcc warning : Support for offline compilation for architectures prior to '<compute/sm/lto>_75' will be removed in a future release (Use -Wno-deprecated-gpu-targets to suppress warning).

sgemm_tiled2d.cu

sgemm_tiled2d.cu(1): warning C4819: The file contains a character that cannot be represented in the current code page (936). Save the file in Unicode format to prevent data loss

sgemm_tiled2d.cu(49): error: identifier "total_results_block_tile" is undefined

const uint number_threads_block_tile = total_results_block_tile / (TM * TN);

^

sgemm_tiled2d.cu(77): error: identifier "c_row" is undefined

A += c_row * BM * K;

^

sgemm_tiled2d.cu(119): error: identifier "thread_col" is undefined

reg_n[i] = smem_B[dot_idx * BN + thread_col * TN + i];

^

sgemm_tiled2d.cu(139): error: expected a declaration

for (uint reg_idx_m = 0; reg_idx_m < TM; ++reg_idx_m)

^

sgemm_tiled2d.cu(194): warning #12-D: parsing restarts here after previous syntax error

randomize_matrix(A, m * k);

^

Remark: The warnings can be suppressed with "-diag-suppress <warning-number>"

sgemm_tiled2d.cu(195): error: this declaration has no storage class or type specifier

randomize_matrix(B, k * n);

^

sgemm_tiled2d.cu(195): error: identifier "B" is undefined

randomize_matrix(B, k * n);

^

sgemm_tiled2d.cu(195): error: identifier "k" is undefined

randomize_matrix(B, k * n);

^

sgemm_tiled2d.cu(195): error: identifier "n" is undefined

randomize_matrix(B, k * n);

^

sgemm_tiled2d.cu(195): error: too many initializer values

randomize_matrix(B, k * n);

^

sgemm_tiled2d.cu(198): error: this declaration has no storage class or type specifier

cudaMalloc((void **)&d_A, m * k * sizeof(float));

^

sgemm_tiled2d.cu(198): error: declaration is incompatible with overloaded function "cudaMalloc" (declared at line 868 of C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v12.8\include\cuda_device_runtime_api.h)

cudaMalloc((void **)&d_A, m * k * sizeof(float));

^

sgemm_tiled2d.cu(198): error: identifier "d_A" is undefined

cudaMalloc((void **)&d_A, m * k * sizeof(float));

^

sgemm_tiled2d.cu(198): error: identifier "m" is undefined

cudaMalloc((void **)&d_A, m * k * sizeof(float));

^

sgemm_tiled2d.cu(198): error: too many initializer values

cudaMalloc((void **)&d_A, m * k * sizeof(float));

^

sgemm_tiled2d.cu(199): error: this declaration has no storage class or type specifier

cudaMalloc((void **)&d_B, k * n * sizeof(float));

^

sgemm_tiled2d.cu(199): error: variable "cudaMalloc" has already been defined (previous definition at line 198)

cudaMalloc((void **)&d_B, k * n * sizeof(float));

^

sgemm_tiled2d.cu(199): error: identifier "d_B" is undefined

cudaMalloc((void **)&d_B, k * n * sizeof(float));

^

sgemm_tiled2d.cu(199): error: too many initializer values

cudaMalloc((void **)&d_B, k * n * sizeof(float));

^

sgemm_tiled2d.cu(200): error: this declaration has no storage class or type specifier

cudaMalloc((void **)&d_C, m * n * sizeof(float));

^

sgemm_tiled2d.cu(200): error: variable "cudaMalloc" has already been defined (previous definition at line 198)

cudaMalloc((void **)&d_C, m * n * sizeof(float));

^

sgemm_tiled2d.cu(200): error: identifier "d_C" is undefined

cudaMalloc((void **)&d_C, m * n * sizeof(float));

^

sgemm_tiled2d.cu(200): error: too many initializer values

cudaMalloc((void **)&d_C, m * n * sizeof(float));

^

sgemm_tiled2d.cu(201): error: this declaration has no storage class or type specifier

cudaMalloc((void **)&d_C_ref, m * n * sizeof(float));

^

sgemm_tiled2d.cu(201): error: variable "cudaMalloc" has already been defined (previous definition at line 198)

cudaMalloc((void **)&d_C_ref, m * n * sizeof(float));

^

sgemm_tiled2d.cu(201): error: identifier "d_C_ref" is undefined

cudaMalloc((void **)&d_C_ref, m * n * sizeof(float));

^

sgemm_tiled2d.cu(201): error: too many initializer values

cudaMalloc((void **)&d_C_ref, m * n * sizeof(float));

^

sgemm_tiled2d.cu(204): error: this declaration has no storage class or type specifier

cudaMemcpy(d_A, A, m * k * sizeof(float), cudaMemcpyHostToDevice);

^

sgemm_tiled2d.cu(204): error: declaration is incompatible with "cudaError_t __stdcall cudaMemcpy(void *, const void *, size_t, cudaMemcpyKind)" (declared at line 6852 of C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v12.8\include\cuda_runtime_api.h)

cudaMemcpy(d_A, A, m * k * sizeof(float), cudaMemcpyHostToDevice);

^

sgemm_tiled2d.cu(204): error: identifier "A" is undefined

cudaMemcpy(d_A, A, m * k * sizeof(float), cudaMemcpyHostToDevice);

^

sgemm_tiled2d.cu(204): error: too many initializer values

cudaMemcpy(d_A, A, m * k * sizeof(float), cudaMemcpyHostToDevice);

^

sgemm_tiled2d.cu(205): error: this declaration has no storage class or type specifier

cudaMemcpy(d_B, B, k * n * sizeof(float), cudaMemcpyHostToDevice);

^

sgemm_tiled2d.cu(205): error: variable "cudaMemcpy" has already been defined (previous definition at line 204)

cudaMemcpy(d_B, B, k * n * sizeof(float), cudaMemcpyHostToDevice);

^

sgemm_tiled2d.cu(205): error: too many initializer values

cudaMemcpy(d_B, B, k * n * sizeof(float), cudaMemcpyHostToDevice);

^

sgemm_tiled2d.cu(206): error: this declaration has no storage class or type specifier

cudaMemcpy(d_C, C, m * n * sizeof(float), cudaMemcpyHostToDevice);

^

sgemm_tiled2d.cu(206): error: variable "cudaMemcpy" has already been defined (previous definition at line 204)

cudaMemcpy(d_C, C, m * n * sizeof(float), cudaMemcpyHostToDevice);

^

sgemm_tiled2d.cu(206): error: identifier "C" is undefined

cudaMemcpy(d_C, C, m * n * sizeof(float), cudaMemcpyHostToDevice);

^

sgemm_tiled2d.cu(206): error: too many initializer values

cudaMemcpy(d_C, C, m * n * sizeof(float), cudaMemcpyHostToDevice);

^

sgemm_tiled2d.cu(207): error: this declaration has no storage class or type specifier

cudaMemcpy(d_C_ref, C_ref, m * n * sizeof(float), cudaMemcpyHostToDevice);

^

sgemm_tiled2d.cu(207): error: variable "cudaMemcpy" has already been defined (previous definition at line 204)

cudaMemcpy(d_C_ref, C_ref, m * n * sizeof(float), cudaMemcpyHostToDevice);

^

sgemm_tiled2d.cu(207): error: identifier "C_ref" is undefined

cudaMemcpy(d_C_ref, C_ref, m * n * sizeof(float), cudaMemcpyHostToDevice);

^

sgemm_tiled2d.cu(207): error: too many initializer values

cudaMemcpy(d_C_ref, C_ref, m * n * sizeof(float), cudaMemcpyHostToDevice);

^

sgemm_tiled2d.cu(209): error: this declaration has no storage class or type specifier

run_sgemm_blocktiling_2d(d_A, d_B, d_C, m, n, k);

^

sgemm_tiled2d.cu(209): error: too many initializer values

run_sgemm_blocktiling_2d(d_A, d_B, d_C, m, n, k);

^

sgemm_tiled2d.cu(212): error: this declaration has no storage class or type specifier

cudaMemcpy(C, d_C, m * n * sizeof(float), cudaMemcpyDeviceToHost);

^

sgemm_tiled2d.cu(212): error: variable "cudaMemcpy" has already been defined (previous definition at line 204)

cudaMemcpy(C, d_C, m * n * sizeof(float), cudaMemcpyDeviceToHost);

^

sgemm_tiled2d.cu(212): error: too many initializer values

cudaMemcpy(C, d_C, m * n * sizeof(float), cudaMemcpyDeviceToHost);

^

sgemm_tiled2d.cu(215): error: this declaration has no storage class or type specifier

sgemm_naive_cpu(A, B, C_ref, m, n, k);

^

sgemm_tiled2d.cu(215): error: declaration is incompatible with "void sgemm_naive_cpu(float *, float *, float *, int, int, int)" (declared at line 12)

sgemm_naive_cpu(A, B, C_ref, m, n, k);

^

sgemm_tiled2d.cu(215): error: too many initializer values

sgemm_naive_cpu(A, B, C_ref, m, n, k);

^

sgemm_tiled2d.cu(218): error: expected a declaration

for (int i = 0; i < m * n; i++)

^

sgemm_tiled2d.cu(227): warning #12-D: parsing restarts here after previous syntax error

printf("Success!\n");

^

sgemm_tiled2d.cu(230): error: this declaration has no storage class or type specifier

cudaEventCreate(&start);

^

sgemm_tiled2d.cu(230): error: declaration is incompatible with overloaded function "cudaEventCreate" (declared at line 3474 of C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v12.8\include\cuda_runtime_api.h)

cudaEventCreate(&start);

^

sgemm_tiled2d.cu(230): error: a value of type "cudaEvent_t *" (aka "CUevent_st **") cannot be used to initialize an entity of type "int"

cudaEventCreate(&start);

^

sgemm_tiled2d.cu(231): error: this declaration has no storage class or type specifier

cudaEventCreate(&stop);

^

sgemm_tiled2d.cu(231): error: variable "cudaEventCreate" has already been defined (previous definition at line 230)

cudaEventCreate(&stop);

^

sgemm_tiled2d.cu(231): error: a value of type "cudaEvent_t *" (aka "CUevent_st **") cannot be used to initialize an entity of type "int"

cudaEventCreate(&stop);

^

sgemm_tiled2d.cu(233): error: this declaration has no storage class or type specifier

cudaEventRecord(start);

^

sgemm_tiled2d.cu(233): error: declaration is incompatible with "cudaError_t __stdcall cudaEventRecord(cudaEvent_t, cudaStream_t)" (declared at line 3552 of C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v12.8\include\cuda_runtime_api.h)

cudaEventRecord(start);

^

sgemm_tiled2d.cu(233): error: a value of type "cudaEvent_t" (aka "CUevent_st *") cannot be used to initialize an entity of type "int"

cudaEventRecord(start);

^

sgemm_tiled2d.cu(234): error: expected a declaration

for (int i = 0; i < 100; i++)

^

sgemm_tiled2d.cu(238): warning #12-D: parsing restarts here after previous syntax error

cudaEventRecord(stop);

^

sgemm_tiled2d.cu(239): error: this declaration has no storage class or type specifier

cudaEventSynchronize(stop);

^

sgemm_tiled2d.cu(239): error: declaration is incompatible with "cudaError_t __stdcall cudaEventSynchronize(cudaEvent_t)" (declared at line 3664 of C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v12.8\include\cuda_runtime_api.h)

cudaEventSynchronize(stop);

^

sgemm_tiled2d.cu(239): error: a value of type "cudaEvent_t" (aka "CUevent_st *") cannot be used to initialize an entity of type "int"

cudaEventSynchronize(stop);

^

sgemm_tiled2d.cu(241): error: this declaration has no storage class or type specifier

cudaEventElapsedTime(&elapsed_time, start, stop);

^

sgemm_tiled2d.cu(241): error: declaration is incompatible with "cudaError_t __stdcall cudaEventElapsedTime(float *, cudaEvent_t, cudaEvent_t)" (declared at line 3739 of C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v12.8\include\cuda_runtime_api.h)

cudaEventElapsedTime(&elapsed_time, start, stop);

^

sgemm_tiled2d.cu(241): error: too many initializer values

cudaEventElapsedTime(&elapsed_time, start, stop);

^

sgemm_tiled2d.cu(241): error: a value of type "float *" cannot be used to initialize an entity of type "int"

cudaEventElapsedTime(&elapsed_time, start, stop);

^

sgemm_tiled2d.cu(243): error: this declaration has no storage class or type specifier

printf("Average run time: %f us\n", avg_run_time);

^

sgemm_tiled2d.cu(243): error: declaration is incompatible with "int __cdecl printf(const char *, ...)" (declared at line 950 of C:\Program Files (x86)\Windows Kits\10\include\10.0.19041.0\ucrt\stdio.h)

printf("Average run time: %f us\n", avg_run_time);

^

sgemm_tiled2d.cu(243): error: too many initializer values

printf("Average run time: %f us\n", avg_run_time);

^

sgemm_tiled2d.cu(243): error: a value of type "const char *" cannot be used to initialize an entity of type "int"

printf("Average run time: %f us\n", avg_run_time);

^

sgemm_tiled2d.cu(246): error: this declaration has no storage class or type specifier

cudaFree(d_A);

^

sgemm_tiled2d.cu(246): error: declaration is incompatible with "cudaError_t __stdcall cudaFree(void *)" (declared at line 5647 of C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v12.8\include\cuda_runtime_api.h)

cudaFree(d_A);

^

sgemm_tiled2d.cu(247): error: this declaration has no storage class or type specifier

cudaFree(d_B);

^

sgemm_tiled2d.cu(247): error: variable "cudaFree" has already been defined (previous definition at line 246)

cudaFree(d_B);

^

sgemm_tiled2d.cu(248): error: this declaration has no storage class or type specifier

cudaFree(d_C);

^

sgemm_tiled2d.cu(248): error: variable "cudaFree" has already been defined (previous definition at line 246)

cudaFree(d_C);

^

sgemm_tiled2d.cu(249): error: this declaration has no storage class or type specifier

cudaFree(d_C_ref);

^

sgemm_tiled2d.cu(249): error: variable "cudaFree" has already been defined (previous definition at line 246)

cudaFree(d_C_ref);

^

sgemm_tiled2d.cu(252): error: expected a declaration

delete[] A;

^

sgemm_tiled2d.cu(253): error: expected a declaration

delete[] B;

^

sgemm_tiled2d.cu(254): error: expected a declaration

delete[] C;

^

sgemm_tiled2d.cu(255): error: expected a declaration

sgemm_tiled2d.cu(255): error: expected a declaration

delete[] C_ref;

delete[] C_ref;

^

sgemm_tiled2d.cu(257): error: expected a declaration

return 0;

^

sgemm_tiled2d.cu(258): error: expected a declaration

}

^

85 errors detected in the compilation of "sgemm_tiled2d.cu".

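Reading through the errors, 小young saw that most of them complained about undeclared variables and redefined functions. But the same code ran fine on the cluster machines, so the declarations and definitions themselves could hardly be at fault.

小young wondered whether the compiler was the problem and simply failed to recognize variables declared as const uint.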

So 小young tried, in order:

- Adding typedef unsigned int uint;

- Replacing const uint -> int

- Reinstalling the CUDA Toolkit

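None of it helped, and by now it was already evening.

After talking it over with a senior teammate, 小young decided to treat the driver as the point of attack and check whether the GPU hardware and the CUDA Toolkit recognized each other (without much hope, since he had run CUDA programs on this very machine a few months earlier, so the driver and toolkit versions should be compatible).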

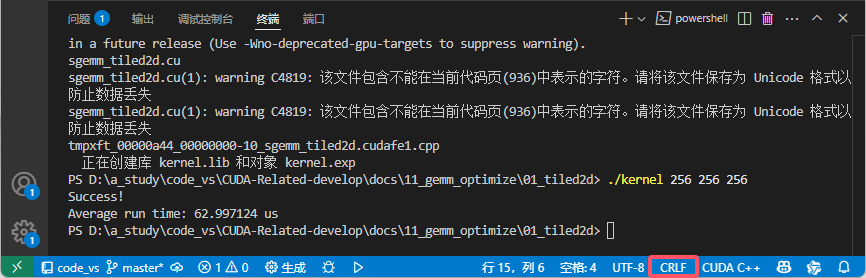

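At that point 小young was following the AI's suggestion to change the file encoding, which was meant to fix the sgemm_tiled2d.cu(1): warning C4819 message about characters that cannot be represented in code page 936. Right next to the encoding setting he noticed another one: the end-of-line sequence, currently LF.

On the principle of "might as well poke at it, things are already broken and can hardly get worse", 小young switched the line ending from LF to CRLF and recompiled.

And then… it compiled…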

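Main Part

CRLF and LF

CRLF and LF are two different end-of-line sequences used to mark a line break in text.

CRLF marks a line break with '\r\n', while LF marks it with '\n'.

A program that expects one sequence may read a file that uses the other differently.

AI explanation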

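CRLF and LF are two different end-of-line sequences used to mark a line break in text. In detail:

- CRLF
  - Meaning: CRLF stands for "Carriage Return Line Feed", the combination of a carriage return (\r) and a line feed (\n). Text files on Windows usually end each line with CRLF.
  - Effect: the carriage return moves the cursor to the start of the current line, and the line feed moves it down one line. Together they start a new line with the cursor at its beginning.
- LF
  - Meaning: LF is the line feed (\n) alone. Unix, Linux, and macOS usually use LF as the end-of-line sequence.
  - Effect: it tells the text-processing program or operating system that the current line has ended and that output or processing continues at the start of the next line.

Recognizing and handling the end-of-line sequence correctly matters across operating systems and text-processing environments; otherwise text may display incorrectly or programs may misread files. For example, opening an LF file on Windows may show broken line breaks, while on Unix or Linux some programs may treat a CRLF file as malformed.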
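To make the difference concrete, here is a minimal C++ sketch that counts both kinds of line ending in a buffer of raw bytes. The names `LineEndingCount` and `count_line_endings` are made up for this illustration and do not come from any library.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Tally of the line terminators found in a byte buffer.
struct LineEndingCount {
    std::size_t crlf = 0;     // "\r\n" pairs (Windows style)
    std::size_t bare_lf = 0;  // "\n" not preceded by "\r" (Unix style)
};

// Hypothetical helper: scans raw bytes and counts both styles.
inline LineEndingCount count_line_endings(const std::string& bytes) {
    LineEndingCount count;
    for (std::size_t i = 0; i < bytes.size(); ++i) {
        if (bytes[i] != '\n') continue;  // only '\n' can terminate a line here
        if (i > 0 && bytes[i - 1] == '\r') {
            ++count.crlf;
        } else {
            ++count.bare_lf;
        }
    }
    return count;
}
```

A file cloned from a Linux-based repository would typically report only bare LFs, while one saved by a Windows editor would report only CRLFs. When feeding it a real file, read the file in binary mode so the runtime does not translate line endings first.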

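Why the compile errors appeared

Windows uses CRLF line endings, while the cloned code was developed on Linux and therefore uses LF. The C4819 warning in the log points to a second ingredient: the original file's comments contain Chinese text stored as UTF-8, but the compiler reads the file under code page 936. A likely mechanism is that the last byte of such a comment gets paired with the LF that follows it as one double-byte character, so the line break disappears and the next line of code is absorbed into the comment. That would explain the flood of xx is undefined and has already been defined errors. With CRLF, only the CR would be absorbed and the LF would still end the line.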
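The following C++ sketch is a toy model of how a double-byte reader could lose a line break after a UTF-8 comment. It rests on an assumption the post does not verify: that any byte in 0x81..0xFE is treated as a lead byte that takes the next byte with it. Real compiler behaviour may differ in detail, and `visible_line_breaks` and `sample_source` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Toy model (an assumption, not the compiler's real decoder): a byte in
// 0x81..0xFE is a lead byte and consumes the following byte, whatever it is.
// Returns how many '\n' bytes survive as standalone line breaks.
inline std::size_t visible_line_breaks(const std::string& bytes) {
    std::size_t breaks = 0;
    std::size_t i = 0;
    while (i < bytes.size()) {
        const unsigned char b = static_cast<unsigned char>(bytes[i]);
        if (b >= 0x81 && b <= 0xFE && i + 1 < bytes.size()) {
            i += 2;  // lead byte swallows the next byte (possibly '\n')
            continue;
        }
        if (b == '\n') ++breaks;
        ++i;
    }
    return breaks;
}

// A comment holding one CJK character as UTF-8 (bytes E9 87 8F), followed by
// a line of code. Hex escapes keep this example's own source pure ASCII.
inline std::string sample_source(const std::string& eol) {
    return std::string("// \xE9\x87\x8F") + eol + "const int x = 1;" + eol;
}
```

In this model, `sample_source("\n")` has two lines but only one visible line break, because the comment's last byte pairs with the LF and the declaration ends up inside the comment. With `sample_source("\r\n")` the CR is swallowed instead and both line breaks survive.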

Once again, Windows earns a groan…

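Solution

Change the end-of-line format of the file: LF -> CRLF. Since the C4819 warning itself recommends saving the file in Unicode format, re-saving it as UTF-8 with BOM may be worth trying as well.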
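For files that cannot be fixed by hand in an editor, the same conversion can be sketched in C++. This is a minimal illustration, not the method used in the post, and `lf_to_crlf` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Hypothetical helper: rewrites every bare "\n" as "\r\n" and leaves
// existing "\r\n" pairs untouched, so running it twice changes nothing more.
inline std::string lf_to_crlf(const std::string& text) {
    std::string out;
    out.reserve(text.size() + text.size() / 16);
    for (std::size_t i = 0; i < text.size(); ++i) {
        const bool bare_lf = text[i] == '\n' && (i == 0 || text[i - 1] != '\r');
        if (bare_lf) out += '\r';  // insert the missing carriage return
        out += text[i];
    }
    return out;
}
```

If this is applied to file contents, both the read and the write should use binary mode (`std::ios::binary`); otherwise the Windows runtime may translate line endings on its own and produce doubled carriage returns.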

END

Hopefully nobody else trips over this one.

Appendix: how to update the CUDA Toolkit, learned while resolving this issue